
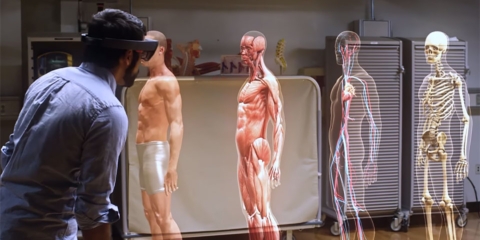

What happened? Researchers from the University of Washington and Google Research have presented HumanNeRF, a method that renders photorealistic, free-viewpoint views of a moving person from ordinary single-camera (monocular) video. It captures fine details such as clothing and facial features. To do this, HumanNeRF learns a volumetric representation of the person in a canonical T-pose, together with a motion field that decomposes the person's movement into skeletal rigid motion and non-rigid motion. This opens up many possibilities for 3D content creation and analysis!

Why is this important? From footage shot with a single camera, the algorithm extracts two things: photorealistic features of the body, including fine details like clothing and faces, and a decomposition of the body's motion into skeletal rigid and non-rigid components. This could help content creators build highly detailed 3D human models, whether for 3D printing or for digital humans in games and movies. For researchers, the system could be used to study how people move or to develop new ways of tracking human motion for applications like virtual reality and robotics.

What’s next? The potential applications for this technology are broad: 3D-printed human models, new ways of tracking human motion, and digital humans for games and movies are just a few of the possibilities. We can’t wait to see what creative people do with HumanNeRF!

What do you think? Let us know in the comments below.

Author: Christian Kromme

First Appeared On: Disruptive Inspiration Daily

Christian is a futurist and trendwatcher who speaks about the impact of exponential technologies like AI on organizations, people, and talent. He tailors his presentations to the specific industry and needs of your audience.

Our world is changing at an exponential rate! A big tidal wave of digital transformation and disruption is coming at us fast. Many organizations see this wave as a threat and experience stress, but there are also organizations that just see this wave as an opportunity.

Imagine sitting with just 10-15 fellow executives at a premier location, gaining clarity on the impact of AI on your industry while enjoying an exquisite dining experience. These are not just meetings—they are transformative moments that will shape the future of your organization

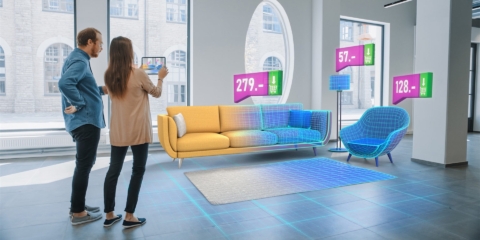

In the future, 3D printing and generative design will allow for products to be designed in a more decentralized manner, and production will take place closer to the customer and fully on-demand. 3D printing technology will also allow for more customization and personalization of products.

The agricultural industry is ripe for disruption. Robotics, AI, and IoT are all technologies that have the potential to radically transform the way we grow food. In combination with vertical farming, these technologies could increase the efficiency and quality of agricultural products.

A human-centered society is one that puts people first and where technology is used to unite and empower people. It is a society that values biological life and dignity above all else. It is a society that recognizes the importance of human relationships and works to strengthen them. In a human-centered society, all members of the community are valued and treated with respect.

The future of healthcare is here. New technologies like AI, IoT, big data, and smart sensors make it possible to become the CEO of your own health. Imagine that your phone can listen to your voice and AI algorithms can detect small nuances in the tone of your voice that indicate specific diseases.