
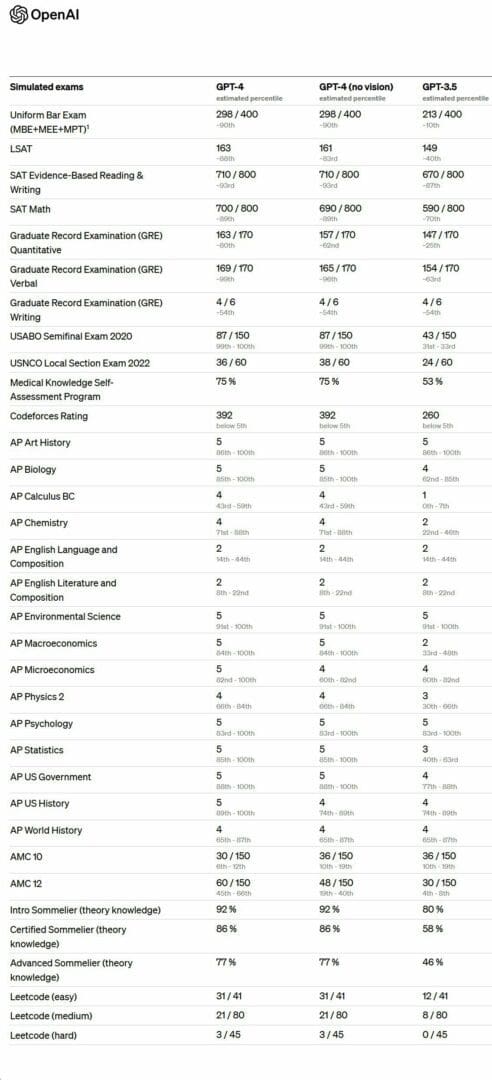

Are you ready for the next big thing in deep learning? OpenAI has just released its latest model, GPT-4, and it is setting new benchmarks for artificial intelligence. GPT-4 is a large multimodal model: it accepts both image and text inputs and produces text outputs. While it is less capable than humans in many real-world scenarios, it exhibits human-level performance on various professional and academic benchmarks. That is a big step forward for artificial intelligence, and something to be excited about.

GPT-4 is a Transformer-based model pre-trained to predict the next token in a document. It generates text one token at a time: it predicts a next token, appends it to the input, and repeats. It can also accept image inputs, a notable step beyond its text-only predecessors. GPT-4 has been tested on a variety of benchmarks, including simulated exams originally designed for humans. On a simulated bar exam it scored around the top 10% of test takers, which is a significant achievement.
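To make "predict the next token" concrete, here is a minimal sketch of the autoregressive loop. It deliberately swaps the Transformer for a toy bigram counter (which only looks at the previous word), so it illustrates the predict-append-repeat idea, not how GPT-4 is actually implemented. The function names and the tiny corpus are hypothetical.

```python
from collections import Counter, defaultdict

def train_bigram(tokens):
    """Count how often each token follows another (a toy stand-in for a Transformer)."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts

def predict_next(counts, token):
    """Greedy decoding: return the most frequent follower of `token`, or None if unseen."""
    followers = counts.get(token)
    if not followers:
        return None
    return followers.most_common(1)[0][0]

def generate(counts, prompt, max_new_tokens=5):
    """Autoregressive loop: predict one token, append it, feed the result back in."""
    out = list(prompt)
    for _ in range(max_new_tokens):
        nxt = predict_next(counts, out[-1])
        if nxt is None:  # no known continuation: stop early
            break
        out.append(nxt)
    return out

corpus = "the model reads the document and the model predicts the next token".split()
counts = train_bigram(corpus)
print(" ".join(generate(counts, ["the"], max_new_tokens=4)))
```

A real model conditions on the whole preceding context rather than one word and usually samples from a probability distribution instead of always taking the top choice, but the outer loop has the same shape.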

The difference between GPT-3.5 and GPT-4 can be subtle in casual conversation, but it becomes apparent once a task is complex enough. GPT-4 is more reliable and creative than its predecessor and can handle much more nuanced instructions. OpenAI also applied a post-training alignment process that improved GPT-4's performance on measures of factuality and adherence to desired behavior.

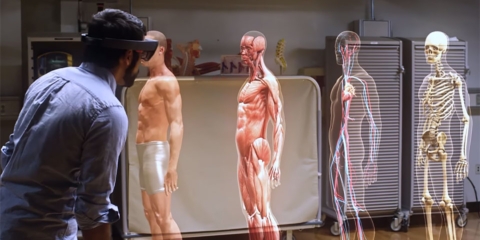

One of the most exciting features of GPT-4 is that it accepts visual inputs: a prompt can mix text and images, and the model responds with text. On image inputs, GPT-4 shows capabilities similar to those it has on text-only inputs. It can also be augmented with test-time techniques developed for text-only language models, such as few-shot prompting (including a handful of worked examples in the prompt) and chain-of-thought prompting (eliciting intermediate reasoning steps before the final answer). However, image inputs are still a research preview and not publicly available.
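Few-shot and chain-of-thought prompting are simply ways of composing the input text. The sketch below assembles such a prompt as a plain string; the `Q:`/`A:` layout, the helper name `build_few_shot_prompt`, and the example questions are illustrative assumptions, and no model API is called.

```python
def build_few_shot_prompt(examples, question, chain_of_thought=False):
    """Assemble a few-shot prompt from (question, reasoning, answer) examples.

    With chain_of_thought=True, each worked example shows its reasoning
    and the final line cues the model to reason step by step.
    """
    parts = []
    for q, reasoning, answer in examples:
        parts.append(f"Q: {q}")
        if chain_of_thought:
            parts.append(f"A: {reasoning} The answer is {answer}.")
        else:
            parts.append(f"A: The answer is {answer}.")
        parts.append("")  # blank line between worked examples
    parts.append(f"Q: {question}")
    parts.append("A: Let's think step by step." if chain_of_thought else "A:")
    return "\n".join(parts)

# Hypothetical worked example; the prompt text would then be sent to a model.
examples = [
    ("A pen costs 2 dollars. How much do 3 pens cost?",
     "Each pen costs 2 dollars, and 3 * 2 = 6.", "6 dollars"),
]
print(build_few_shot_prompt(
    examples, "A book costs 5 dollars. How much do 4 books cost?", chain_of_thought=True))
```

The only difference between the two modes is whether the examples (and the final cue) expose intermediate reasoning; the model itself is unchanged, which is why these count as test-time techniques.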

Despite its capabilities, GPT-4 still has limitations. It can "hallucinate" facts and make reasoning errors, which is a concern in high-stakes contexts. Language model outputs should be used with great care, and the safeguards (such as human review or grounding with additional context) should match the needs of the specific use case. That said, GPT-4 hallucinates significantly less than previous models: it scores 40% higher than OpenAI's latest GPT-3.5 on the company's internal adversarial factuality evaluations.

The Bar Exam: 90th percentile, LSAT: 88th percentile, GRE Quantitative: 80th percentile, GRE Verbal: 99th percentile, every AP, the SAT...

OpenAI is collaborating closely with a single partner to prepare the image input capability for wider availability. It is also open-sourcing OpenAI Evals, its framework for automated evaluation of AI model performance, so that anyone can report shortcomings in OpenAI's models and help guide further improvements. This should make evaluating models a more transparent and collaborative process.
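At its core, automated evaluation means running a model over a fixed set of prompts and scoring the outputs against reference answers. The sketch below shows that idea with exact-match scoring. It is not the OpenAI Evals API: the function `run_exact_match_eval`, the `(prompt, ideal)` pair format, and the canned stand-in "model" are all hypothetical.

```python
from typing import Callable, List, Tuple

def run_exact_match_eval(
    samples: List[Tuple[str, str]],
    model_fn: Callable[[str], str],
) -> Tuple[float, List[Tuple[str, str, str]]]:
    """Score model_fn on (prompt, ideal) pairs by exact string match.

    Returns the accuracy and a list of (prompt, ideal, output) failures,
    which is the kind of shortcoming report an eval is meant to surface.
    """
    failures = []
    for prompt, ideal in samples:
        output = model_fn(prompt).strip()  # ignore surrounding whitespace
        if output != ideal:
            failures.append((prompt, ideal, output))
    if not samples:
        return 0.0, failures
    accuracy = (len(samples) - len(failures)) / len(samples)
    return accuracy, failures

# A canned lookup table stands in for a real model call.
samples = [("2+2=", "4"), ("Capital of France?", "Paris"), ("3*3=", "9")]
canned = {"2+2=": "4", "Capital of France?": "Paris ", "3*3=": "6"}
accuracy, failures = run_exact_match_eval(samples, lambda p: canned[p])
print(f"accuracy={accuracy:.2f}", failures)
```

Exact match is the simplest possible grader; real evaluation suites typically also need fuzzier checks (or a model acting as grader) for open-ended answers.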

The release of GPT-4 is a significant milestone for artificial intelligence: a large multimodal model that accepts image and text inputs, produces text outputs, and reaches human-level performance on various professional and academic benchmarks, even though it remains less capable than humans in many real-world scenarios. In ChatGPT, GPT-4 will first be available to paying ChatGPT Plus subscribers, and perhaps later to free users as well.

Author: Christian Kromme

First Appeared On: Disruptive Inspiration Daily


Christian is a futurist and trendwatcher who speaks about the impact of exponential technologies like AI on organizations, people, and talents. Christian tailors his presentations to your audience's specific industries and needs.

Our world is changing at an exponential rate! A big tidal wave of digital transformation and disruption is coming at us fast. Many organizations see this wave as a threat and experience stress, but there are also organizations that just see this wave as an opportunity.

Imagine sitting with just 10-15 fellow executives at a premier location, gaining clarity on the impact of AI on your industry while enjoying an exquisite dining experience. These are not just meetings—they are transformative moments that will shape the future of your organization

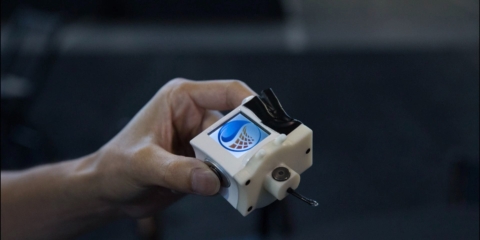

In the future, 3D printing and generative design will allow for products to be designed in a more decentralized manner, and production will take place closer to the customer and fully on-demand. 3D printing technology will also allow for more customization and personalization of products.

The agricultural industry is ripe for disruption. Robotics, AI, and IoT are all technologies that have the potential to radically transform the way we grow food. In combination with vertical farming, these technologies could increase the efficiency and quality of agricultural products.

A human-centered society is one that puts people first and where technology is used to unite and empower people. It is a society that values biological life and dignity above all else. It is a society that recognizes the importance of human relationships and works to strengthen them. In a human-centered society, all members of the community are valued and treated with respect.

The future of healthcare is here. New technologies like AI, IoT, big data, and smart sensors make it possible to become the CEO of your own health. Imagine that your phone can listen to your voice and AI algorithms can detect small nuances in the tone of your voice that indicate specific diseases.