
What happened?

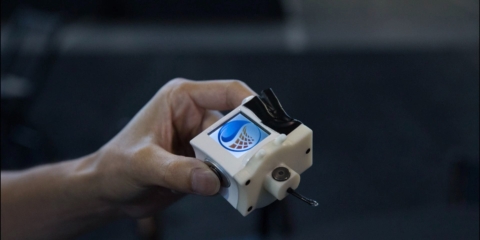

If you thought Google's surveillance capabilities were creepy before, they're about to get even creepier. The tech giant has developed a new AI system that can read your body language and predict what you're going to do next, without even needing cameras. That's right, Google's new technology can interpret the way you move, from the tiniest twitch of your fingers to the slightest shift in your posture, and use that information to divine your intentions. And it's all thanks to a little something called Project Soli, Google's miniature radar-sensing technology.

Read the entire article here: https://bit.ly/3q9KioK

Why is this important?

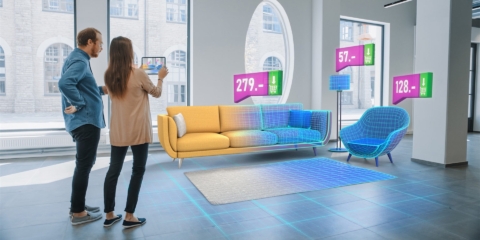

What if your computer decided not to blare out a notification jingle because it noticed you weren’t sitting at your desk? What if your TV saw you leave the couch to answer the front door and paused Netflix automatically, then resumed playback when you sat back down? What if our computers took more social cues from our movements and learned to be more considerate companions? It sounds futuristic, and perhaps more than a little invasive: a computer watching your every move? But it feels less creepy once you learn that these technologies don’t have to rely on a camera to see where you are and what you’re doing. Instead, they use radar.

Google’s Advanced Technology and Products division, better known as ATAP (the department behind oddball projects such as a touch-sensitive denim jacket), has spent the past year exploring how computers can use radar to understand our needs or intentions and then react to us appropriately. Recently, radar sensors were embedded inside the second-generation Nest Hub smart display to detect the movement and breathing patterns of the person sleeping next to it. The device was then able to track the person’s sleep without requiring them to strap on a smartwatch.
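The article does not describe the signal processing behind the Nest Hub's sleep sensing. What follows is only a minimal sketch of one common, generic way to turn a motion signal into a breathing rate. It is not Google's method.

The sketch assumes the radar output has already been reduced to a list of chest-displacement samples at a known sample rate. The function name `breathing_rate_bpm` and the 6 to 30 breaths-per-minute search band are illustrative assumptions. The function picks the candidate rate whose single-bin DFT response is strongest.

```python
import math


def breathing_rate_bpm(displacement, sample_rate_hz, min_bpm=6.0, max_bpm=30.0, step_bpm=0.1):
    """Estimate a breathing rate in breaths per minute from a chest-displacement signal.

    Hypothetical helper (not a Google API): scans candidate rates between min_bpm
    and max_bpm and returns the one whose single-bin DFT power is largest.
    """
    n = len(displacement)
    if n == 0 or sample_rate_hz <= 0 or step_bpm <= 0 or max_bpm < min_bpm:
        raise ValueError("need a non-empty signal, positive rates, and a valid band")

    # Remove the constant offset (e.g. the sleeper's resting distance from the sensor).
    mean = sum(displacement) / n
    centered = [x - mean for x in displacement]

    best_bpm, best_power = min_bpm, -1.0
    steps = int(round((max_bpm - min_bpm) / step_bpm))
    for i in range(steps + 1):
        bpm = min_bpm + i * step_bpm
        freq_hz = bpm / 60.0
        # Correlate the signal with a complex sinusoid at this candidate frequency.
        re = im = 0.0
        for k, x in enumerate(centered):
            angle = 2.0 * math.pi * freq_hz * k / sample_rate_hz
            re += x * math.cos(angle)
            im -= x * math.sin(angle)
        power = re * re + im * im
        if power > best_power:
            best_bpm, best_power = bpm, power

    # Round away floating-point noise from the grid arithmetic.
    return round(best_bpm, 1)
```

Here is an example. A synthetic signal with a 0.25 Hz breathing component (15 breaths per minute) is sampled at 10 Hz for 60 seconds and has a weaker 1.2 Hz heartbeat-like component added. For that signal the function returns 15.0. A real system would also have to cope with body movement, more than one person, and periods when nobody is present.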

Christian is a futurist and trendwatcher who speaks about the impact of exponential technologies like AI on organizations, people, and talents. Christian tailors his presentations to your audience's specific industries and needs.

In the future, 3D printing and generative design will allow for products to be designed in a more decentralized manner, and production will take place closer to the customer and fully on-demand. 3D printing technology will also allow for more customization and personalization of products.

The agricultural industry is ripe for disruption. Robotics, AI, and IoT are all technologies that have the potential to radically transform the way we grow food. In combination with vertical farming, these technologies could increase the efficiency and quality of agricultural products.

A human-centered society is one that puts people first and where technology is used to unite and empower people. It is a society that values biological life and dignity above all else. It is a society that recognizes the importance of human relationships and works to strengthen them. In a human-centered society, all members of the community are valued and treated with respect.

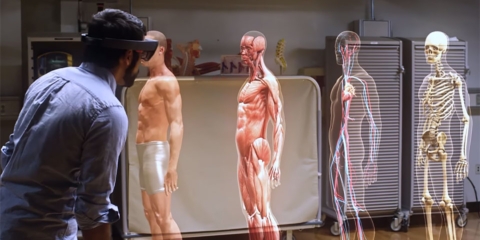

The future of healthcare is here. New technologies like AI, IoT, big data, and smart sensors make it possible to become the CEO of your own health. Imagine that your phone can listen to your voice and AI algorithms can detect small nuances in the tone of your voice that indicate specific diseases.