
Algorithms, AI, and big data are all providing new ways to make better, faster, data-driven decisions. But they come with a heavy price! We have lost control of these systems and the ability to understand how they make decisions that impact our lives. Even more concerning, we have become complacent and comfortable with this lack of understanding. We have given up our agency to hold these systems accountable. But what if there were a way for AI to explain its decision-making process? Would that make you more comfortable with trusting AI?

It’s no secret that artificial intelligence is becoming increasingly sophisticated every day. What used to be the stuff of science fiction is now a reality, thanks to algorithms that can make sense of vast amounts of data. However, with this great power comes responsibility: as AI becomes more ubiquitous, we need to ensure that its decisions are transparent and accountable. A team of researchers from PRODI at Ruhr-Universität Bochum is developing a new approach to do just that.

The team has been working on a way to make artificial intelligence more accountable for its decisions. Currently, many AI systems make decisions using algorithms that are opaque to us humans. This lack of transparency can lead to mistrust and a feeling of powerlessness. The team’s goal is to make AI’s decision-making process transparent, so we can trust it.

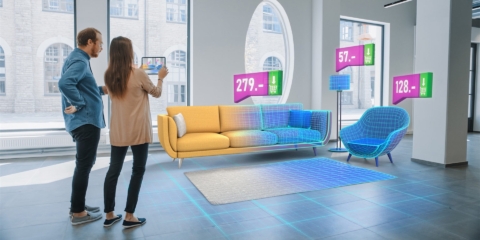

The approach the team is developing would allow AI to explain its decisions in a way that is understandable to humans. For example, if you were to use an AI-powered app to book a hotel room, the app could explain how it chose the room it did based on your preferences. This would give you a better understanding of how AI works and help you to make informed decisions about using AI in the future. Or what about an AI algorithm diagnosing deadly diseases? If the algorithm could explain its decision to a doctor and patient, it would help to build trust and confidence in its ability to make accurate diagnoses.

The team is still working on the approach and has not yet implemented it in any real-world applications. However, they are hopeful that their work will lead to a new era of transparency and accountability for AI. In a world where AI is becoming increasingly commonplace, this is a crucial step to ensure that we can trust the decisions it makes.

Do you think this new approach would be beneficial? Would you feel more comfortable using AI if it could explain its decision-making process? Let us know your thoughts in the comments below!

Author: Christian Kromme

First Appeared On: Disruptive Inspiration Daily

Christian is a futurist and trendwatcher who speaks about the impact of exponential technologies like AI on organizations, people, and talents. Christian tailors his presentations to your audience’s specific industries and needs.

Embracing the advancements of technology and AI can enhance our humanity. By automating mundane tasks and freeing up our time, we can focus on developing our unique talents and skills. As humans, we can adapt and learn, allowing us to constantly evolve and stay relevant in a rapidly changing world.

Organizations will need to be more fluid, dynamic, and adaptable, able to change and adjust in response to new situations and environments. We are on the cusp of a new era of organizations that are more fluid and agile and that behave like the swarms we see in nature.

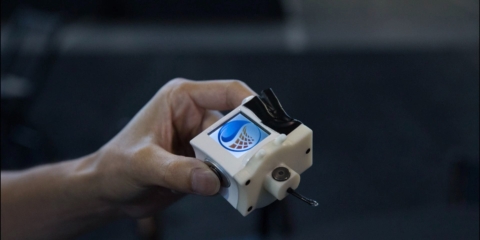

In the future, 3D printing and generative design will allow for products to be designed in a more decentralized manner, and production will take place closer to the customer and fully on-demand. 3D printing technology will also allow for more customization and personalization of products.

The agricultural industry is ripe for disruption. Robotics, AI, and IoT are all technologies that have the potential to radically transform the way we grow food. In combination with vertical farming, these technologies could increase the efficiency and quality of agricultural products.

A human-centered society is one that puts people first and where technology is used to unite and empower people. It is a society that values biological life and dignity above all else. It is a society that recognizes the importance of human relationships and works to strengthen them. In a human-centered society, all members of the community are valued and treated with respect.

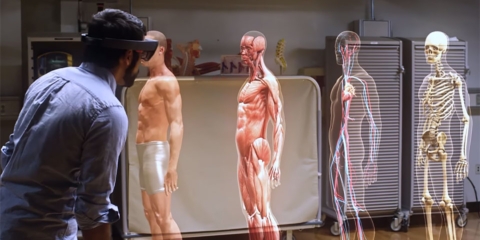

The future of healthcare is here. New technologies like AI, IoT, big data, and smart sensors make it possible to become the CEO of your own health. Imagine that your phone can listen to your voice and AI algorithms can detect small nuances in the tone of your voice that indicate specific diseases.